In a world buzzing with digital chatter, ChatGPT stands out as a powerhouse of conversation. But have you ever wondered what fuels this chatty genius? Spoiler alert: it’s not just coffee and good vibes! Understanding how ChatGPT uses energy can shed light on the remarkable technology behind those witty responses and insightful answers.

Overview of ChatGPT and Energy Consumption

ChatGPT operates as a powerful conversational AI, leveraging advanced algorithms and substantial computational resources. The data centers running these models require considerable energy. Each interaction with ChatGPT entails numerous calculations, and across many interactions those calculations add up to significant energy consumption.

Energy use varies based on model size and complexity. Larger models demand more resources, which translates to higher electricity needs. Research indicates that training a large AI model can consume as much electricity as many households use in a year, which underscores the importance of sustainable practices in AI development.

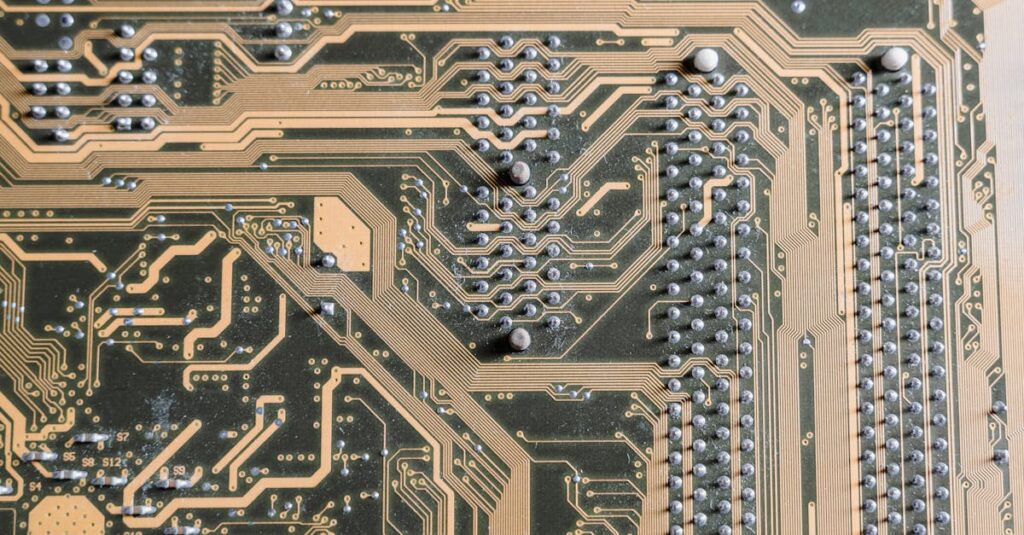

Behind every response ChatGPT generates is physical hardware. Graphics processing units (GPUs) and specialized chips perform these calculations, and the efficiency of these machines affects overall energy usage, prompting companies to explore greener technologies.

Moreover, improvements in software algorithms contribute to energy efficiency. Optimizing code minimizes computational load, leading to reduced energy consumption during both training and inference phases. Companies continue to invest in sustainable energy sources, such as solar and wind, to power their data centers, thus decreasing the carbon footprint associated with AI technologies.

In conversations about AI, energy consumption is crucial. Understanding how ChatGPT uses energy aids in the broader conversation about sustainability within technology. Efforts within the industry focus on balancing performance with environmental responsibilities, emphasizing the need for conscious energy management strategies.

The Process of Energy Usage in ChatGPT

Energy usage in ChatGPT occurs during both model training and inference phases, with distinct demands in each.

Model Training Phases

Significant energy consumption arises during training, which involves processing vast datasets. High-powered GPUs and specialized chips work extensively, executing complex computations and adjustments. Data centers often require considerable electricity to maintain optimal performance while ensuring cooling systems operate effectively. Larger models amplify this energy demand, as they require more resources for training iterations. Research indicates that optimizing algorithms can lower energy requirements during these phases. Companies are increasingly focusing on developing energy-efficient training methods to mitigate environmental impact.
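As a rough illustration of why training is so energy intensive, the accelerator energy for a run can be approximated as the number of GPUs multiplied by their average power draw and the run time. The sketch below does that arithmetic in Python. The function name and every figure in it (GPU count, wattage, duration) are hypothetical placeholders, not measurements of ChatGPT's training.

```python
def training_energy_kwh(num_gpus: int, avg_gpu_power_watts: float, hours: float) -> float:
    """Approximate accelerator energy for a training run, in kilowatt-hours.

    Ignores CPUs, networking, storage, and cooling, so it understates
    the energy drawn by the whole facility.
    """
    if num_gpus < 0 or avg_gpu_power_watts < 0 or hours < 0:
        raise ValueError("inputs must be non-negative")
    watt_hours = num_gpus * avg_gpu_power_watts * hours
    return watt_hours / 1000.0  # convert Wh to kWh


# Hypothetical runs (illustrative numbers only): 30 days at 400 W per GPU.
small_run = training_energy_kwh(num_gpus=1000, avg_gpu_power_watts=400.0, hours=30 * 24)
# Doubling the GPU count for the same duration doubles the estimate.
large_run = training_energy_kwh(num_gpus=2000, avg_gpu_power_watts=400.0, hours=30 * 24)
print(f"small run: {small_run:,.0f} kWh, large run: {large_run:,.0f} kWh")
```

Under these assumptions the estimate scales linearly with each input, which is why larger models that need more hardware for longer push the total up so quickly.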

Model Inference Phases

Inference, in which the model generates responses to user inputs, also consumes energy. Each interaction prompts the system to perform computations in real time. Infrastructure for serving these requests relies heavily on reliable data center operations and responsive hardware configurations. Although an individual query consumes far less energy than a training run, the cumulative effect of millions of interactions can be substantial. Efficient software optimizations help reduce the energy footprint during inference. Adopting renewable energy sources is becoming a common strategy among companies operating large AI systems.
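The cumulative effect is simple multiplication, and a short sketch makes the scale visible. The per-query energy, query volume, and training figure below are hypothetical values chosen to keep the arithmetic easy to follow; they are not published numbers for ChatGPT.

```python
def daily_inference_kwh(wh_per_query: float, queries_per_day: float) -> float:
    """Total inference energy per day, in kilowatt-hours."""
    return wh_per_query * queries_per_day / 1000.0


def days_to_match_training(training_kwh: float, wh_per_query: float,
                           queries_per_day: float) -> float:
    """Days of serving after which cumulative inference energy equals training energy."""
    daily = daily_inference_kwh(wh_per_query, queries_per_day)
    if daily <= 0:
        raise ValueError("daily inference energy must be positive")
    return training_kwh / daily


# Hypothetical inputs: 0.5 Wh per query, 10 million queries per day,
# and a training run that used 500,000 kWh.
daily = daily_inference_kwh(wh_per_query=0.5, queries_per_day=10_000_000)
breakeven_days = days_to_match_training(
    training_kwh=500_000, wh_per_query=0.5, queries_per_day=10_000_000
)
print(f"{daily:,.0f} kWh per day; matches training energy after {breakeven_days:.0f} days")
```

With these made-up inputs, a tiny per-query cost overtakes the one-time training cost within a few months of serving, which is the pattern the paragraph above describes.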

Factors Affecting Energy Use

ChatGPT’s energy usage depends on several critical factors. These include hardware considerations and software optimization, both of which directly influence efficiency and energy consumption.

Hardware Considerations

Energy consumption largely hinges on hardware components. High-performance GPUs and specialized chips play a significant role in processing data. They provide the necessary computational power but also demand considerable electricity. Even data centers equipped with advanced hardware can run inefficiently without proper management strategies. Cooling systems also contribute to energy use, as they maintain optimal operating temperatures for equipment. Evaluating and updating hardware regularly can enhance energy efficiency and reduce overall electricity needs for running ChatGPT.
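One common way to express the overhead from cooling and other facility loads is power usage effectiveness (PUE), defined as total facility energy divided by the energy used by IT equipment. The sketch below applies that definition. The function names and the PUE and load values are assumptions for illustration, not figures for any real data center.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE is total energy / IT energy, so it cannot be below 1.0")
    return it_energy_kwh * pue


def overhead_kwh(it_energy_kwh: float, pue: float) -> float:
    """Energy spent on cooling, power conversion, and other non-IT loads."""
    return facility_energy_kwh(it_energy_kwh, pue) - it_energy_kwh


# Hypothetical comparison: the same 100,000 kWh IT load in two facilities.
results = {}
for label, pue in [("less efficient site", 1.5), ("more efficient site", 1.25)]:
    total = facility_energy_kwh(100_000, pue)
    extra = overhead_kwh(100_000, pue)
    results[label] = (total, extra)
    print(f"{label}: PUE {pue} -> {total:,.0f} kWh total, {extra:,.0f} kWh overhead")
```

The same hardware doing the same work uses less electricity overall in the facility with the lower PUE, which is why facility management matters alongside chip efficiency.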

Software Optimization

Software optimization serves as a vital factor in reducing energy use. Efficient algorithms can significantly decrease the amount of energy required during both training and inference. Streamlined code minimizes unnecessary computations, saving resources. Companies continuously develop smarter software solutions that prioritize energy-saving techniques. Adopting these innovations not only lowers energy costs but also promotes sustainability in AI technology. Emphasizing optimization strategies gives developers the tools necessary to balance performance and efficiency.
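A generic example of skipping unnecessary computation is caching: if the same input arrives twice, the stored result can be returned instead of recomputing it. The sketch below uses Python's standard `functools.lru_cache` with a stand-in function. It illustrates the general idea only; `answer` is a hypothetical placeholder, and nothing here describes how ChatGPT is actually served.

```python
from functools import lru_cache

call_count = 0  # counts how often the expensive path actually runs


@lru_cache(maxsize=1024)
def answer(prompt: str) -> str:
    """Stand-in for an expensive computation; repeated prompts hit the cache."""
    global call_count
    call_count += 1
    return prompt.upper()  # placeholder work, not a real model


prompts = ["hello", "what is energy?", "hello", "hello", "what is energy?"]
responses = [answer(p) for p in prompts]
print(f"{len(prompts)} requests, {call_count} expensive computations")
```

Only the first occurrence of each distinct prompt triggers the expensive path, so the energy spent on computation tracks the number of unique inputs rather than the number of requests.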

Environmental Impact of ChatGPT’s Energy Use

ChatGPT’s energy consumption significantly influences its environmental footprint. Understanding this impact is essential for promoting sustainability.

Carbon Footprint Assessment

Carbon emissions generated during ChatGPT’s operation stem from energy used in its data centers. High-performance GPUs and specialized chips contribute to this energy demand. Research suggests large-scale AI models can generate substantial carbon footprints, particularly when powered by fossil fuels. Promoting renewable energy usage helps mitigate these emissions. Companies focusing on carbon-neutral strategies actively seek ways to reduce their overall impact. Transitioning to energy-efficient systems and optimizing training algorithms can further lessen carbon output. As the AI landscape evolves, continuous monitoring of carbon footprints is vital for responsible development.
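The link between energy and emissions can be sketched as energy multiplied by the carbon intensity of the electricity supply. In the Python example below, the grid labels and intensity values are hypothetical round numbers picked for illustration, not data for any specific region or provider.

```python
def emissions_kg_co2e(energy_kwh: float, grid_intensity_kg_per_kwh: float) -> float:
    """Operational emissions: energy used times the grid's carbon intensity."""
    if energy_kwh < 0 or grid_intensity_kg_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    return energy_kwh * grid_intensity_kg_per_kwh


# Hypothetical carbon intensities in kg CO2e per kWh (illustrative only).
HYPOTHETICAL_GRIDS = {
    "fossil-heavy grid": 0.8,
    "mixed grid": 0.4,
    "mostly renewable grid": 0.05,
}

energy_kwh = 100_000  # the same workload on each grid
footprints = {
    name: emissions_kg_co2e(energy_kwh, intensity)
    for name, intensity in HYPOTHETICAL_GRIDS.items()
}
for name, kg in footprints.items():
    print(f"{name}: {kg / 1000:,.1f} tonnes CO2e")
```

The workload is identical in all three cases; only the energy source changes, which is why shifting to renewable power reduces emissions even when energy use stays flat.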

Comparison with Other AI Models

When comparing energy use, ChatGPT exhibits unique characteristics among AI models. Larger models generally require more computational resources, leading to increased energy consumption. A study indicated that some competing models necessitate up to 20% more energy during training. Despite this, optimizations in ChatGPT’s architecture enable efficiency gains. Efficient software and hardware choices in ChatGPT’s deployment often result in lower energy loads compared to others. The emphasis on sustainable practices sets ChatGPT apart in an increasingly competitive AI field. Tracking energy usage across various models remains crucial for understanding their relative environmental impacts.

Future Developments in Energy Efficiency

Continuous advancements in energy efficiency offer opportunities for ChatGPT’s enhancement. Innovative hardware solutions are emerging, aimed at reducing overall energy consumption in data centers. New GPU architectures provide substantial improvements in performance per watt, lowering costs associated with electricity.
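Performance per watt can be made concrete by converting throughput and power draw into energy per unit of work. The sketch below compares two hypothetical GPUs; the throughput and wattage figures are invented for illustration and do not describe any real product.

```python
JOULES_PER_KWH = 3_600_000  # 1 kWh = 3.6 million joules


def tokens_per_joule(tokens_per_second: float, power_watts: float) -> float:
    """Performance per watt: (tokens/s) / W is the same as tokens per joule."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return tokens_per_second / power_watts


def kwh_per_million_tokens(tokens_per_second: float, power_watts: float) -> float:
    """Energy needed to process one million tokens at the given efficiency."""
    joules = 1_000_000 / tokens_per_joule(tokens_per_second, power_watts)
    return joules / JOULES_PER_KWH


# Hypothetical GPUs: the newer one draws more power but does far more work.
older = kwh_per_million_tokens(tokens_per_second=1000, power_watts=400)
newer = kwh_per_million_tokens(tokens_per_second=3000, power_watts=600)
print(f"older: {older:.4f} kWh per million tokens")
print(f"newer: {newer:.4f} kWh per million tokens")
```

In this made-up comparison the newer chip has a higher power rating yet uses half the energy per token, which is the sense in which better performance per watt lowers electricity costs.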

Software improvements are also pivotal in this progress. Machine learning algorithms evolve to prioritize energy efficiency, reducing the power needed for training and inference tasks. Seamless integration of more efficient coding practices can cut energy use without compromising performance.

Implementing renewable energy sources plays a crucial role in future developments. Companies recognize the benefits of solar and wind power for data centers, driving down carbon emissions significantly. Strategies focusing on carbon neutrality can further enhance ChatGPT’s sustainability profile.

Collective efforts in research and collaboration contribute to advancements in energy-saving technologies. Industry leaders share best practices and innovations, fostering a culture of continuous improvement in energy management. Developing partnerships between technology firms and environmental agencies offers additional pathways to explore sustainable practices.

Regulatory pressures also influence energy efficiency trends. Governments increasingly mandate energy-efficient practices in tech industries, pushing companies to adopt cleaner technologies. Monitoring energy usage will become essential in assessing compliance and driving further improvements.

Ongoing research into quantum computing presents another avenue for energy efficiency. Researchers investigate how quantum technologies might outperform traditional computing methods, potentially revolutionizing energy consumption patterns in AI systems. As these developments unfold, they promise a more sustainable future for AI operations, including ChatGPT.

ChatGPT’s energy consumption highlights the intricate balance between advanced AI capabilities and environmental responsibility. As the demand for conversational AI grows, its energy requirements will continue to be scrutinized. The focus on energy efficiency through innovative hardware and software solutions is essential for sustainable development.

Companies are increasingly adopting renewable energy sources and optimizing their operations to reduce carbon footprints. This commitment to sustainability not only enhances performance but also aligns with global efforts to combat climate change.

By prioritizing energy management and embracing new technologies, the industry can make the future of AI both powerful and environmentally conscious. The journey toward greener AI practices is just beginning, and it will shape the landscape of technology for years to come.